Introduction

This article will look at populating an archive of Twitter data into SQL Server 2014. This process can apply to older versions of SQL Server.

Obtaining an NSA™ Approved Twitter Archive

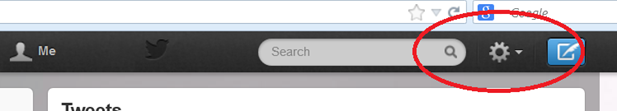

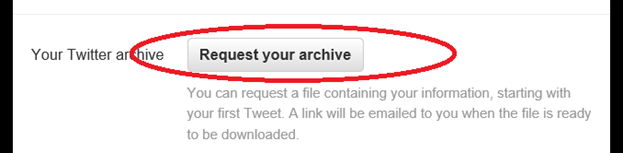

Log onto Twitter using the Web interface (i.e. navigate to http://www.twitter.com). Log in with your account credentials, and then click on the “cog” image on the top right hand side, and select “settings”.

click on “Account” from the left hand side menu and the scroll to the very bottom:

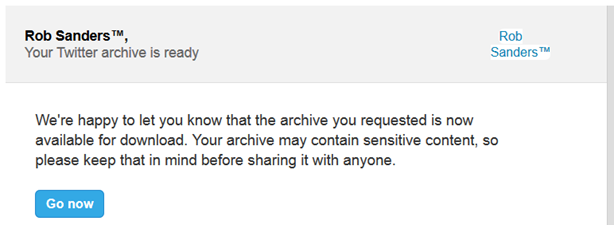

Request an archive of your tweets

You’ll be send an e-mail to your registered e-mail address when the archive is ready to be downloaded. My main Twitter account has nearly 10,000 tweets and was established in January 2007, yet the archive was ready almost instantly. By the time I checked my e-mail account, the link was there.

Reviewing the Archive

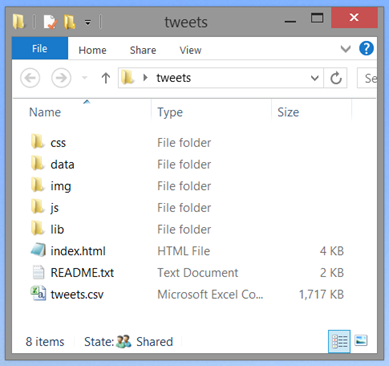

The archive is a zip file and contains some interesting content.

The simplest way to access the archive data is to load the supplied index.html into any standard web browser.

The offline archive is completely searchable, and also has some interesting account information, where you can view your User ID (mine’s in the 600,000 range) as well as the date you joined (for me, January 11th, 2007 in a beautiful DB-compatible format).

But wait.. there’s more

If you examine the archive folder contents more closely, you’ll also discover something extremely handy – a file called tweets.csv. In my case, it is 1.7mb in size. Opening the .csv file, I can discern the following column headings:

| Column Name | Potential Usage | Data Type |

| tweet_id | Unique Identifier of a Tweet? | Numeric |

| in_reply_to_status_id | In the context of someone else’s tweet | Numeric |

| in_reply_to_user_id | Reply to a specific user | Integer |

| timestamp | Date/Time of the tweet | Date |

| source | Origin of the tweet (e.g. web, mobile device) | Unicode String |

| text | Text of the tweet | Unicode String |

| retweeted_status_id | Original tweet (if re-tweeted) | Numeric |

| retweeted_status_user_id | User of the original tweet | Integer |

| retweeted_status_timestamp | Original date.time of the re-tweeted tweet | Date |

| expanded_urls | Original URI (before URL minification) | Unicode String |

Now, for test data purposes, this is a little light – the data comes in at just over 9800 rows, but it should be useful enough for the time being. Remember that Unicode lengths are double-byte, to be safe, I gave the “text” column a precision of 300 (140*2 = 280) just to give some extra space, to be on the safe side.

Importing the Data into SQL Server

First thing I’m going to do is copy the .csv file into my test VM. The rest of this article more or less concerns itself with walking through how to properly use the SQL Server Data Import/Export Tool.

The first thing to do is change the Data Source to “Flat File Source” and then browse for the .csv file. Once you’ve done that you can verify the data by clicking on the columns option from the left hand side nav. Make sure you set the “Text qualifier” field in the “General” options to quotation mark (“) or you’ll get data conversion issues.

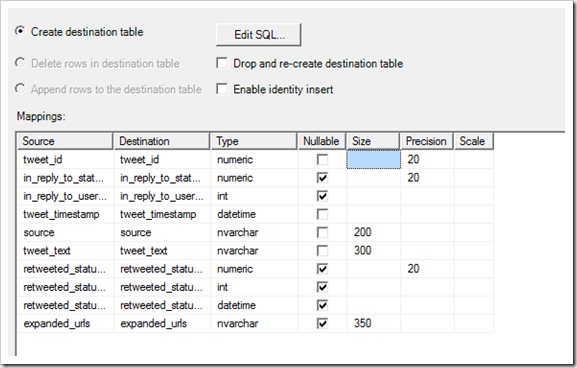

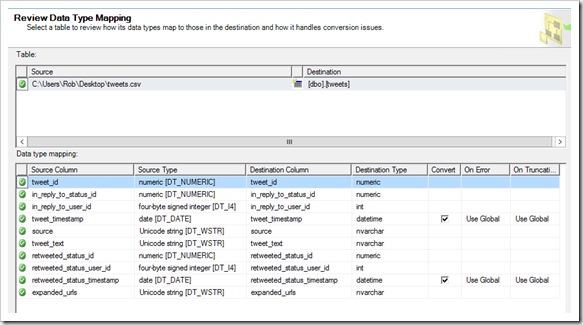

You should click on the “Advanced” option and rename the columns and data types/sizes. I applied the data types listed in the table below, and also set some column lengths/precision. This worked for me, but your experience may differ. If you’re at all unsure, use the “Suggest Types…” wizard to help with selections.

| Column Name | Data Type | SQL Type | Length/Precision | Nullable? |

| tweet_id | numeric | DT_NUMERIC | 20 | No |

| in_reply_to_status_id | numeric | DT_NUMERIC | 20 | Yes |

| in_reply_to_user_id | four-byte signed integer | DT_I4 | Yes | |

| tweet_timestamp | date | DT_DATE | No | |

| source | Unicode string | DT_WSTR | 200 | No |

| tweet_text | Unicode string | DT_WSTR | 300 | No |

| retweeted_status_id | numeric | DT_NUMERIC | 20 | Yes |

| retweeted_status_user_id | four-byte signed integer | DT_I4 | Yes | |

| retweeted_status_timestamp | date | DT_DATE | Yes | |

| expanded_urls | Unicode string | DT_WSTR | 350 | Yes |

NOTE: The columns above in bold I have renamed from the original .csv heading names to avoid using SQL Types as column names.

Select “Preview” to do a quick sanity check. Once satisfied, click next to set the destination options. For these articles, I’ve created a new database called ‘PreviewTest’. When you get to the “source tables” screen, click on the “edit mappings” dialog and just check everything’s set right.

When ready, execute the import as a new SSIS package. This took me about half an hour to get all the data types right, both for the input definition and the target destination.

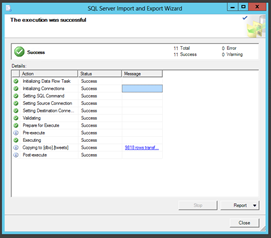

If all goes to plan, you should have rows inserted into a brand new table.

Result!

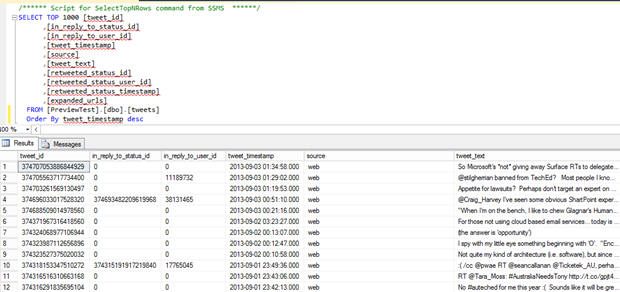

After a successful import, I can now query from the [tweets] table, and see all my glorious Twitter contributions:

Post-Import Tidy Up

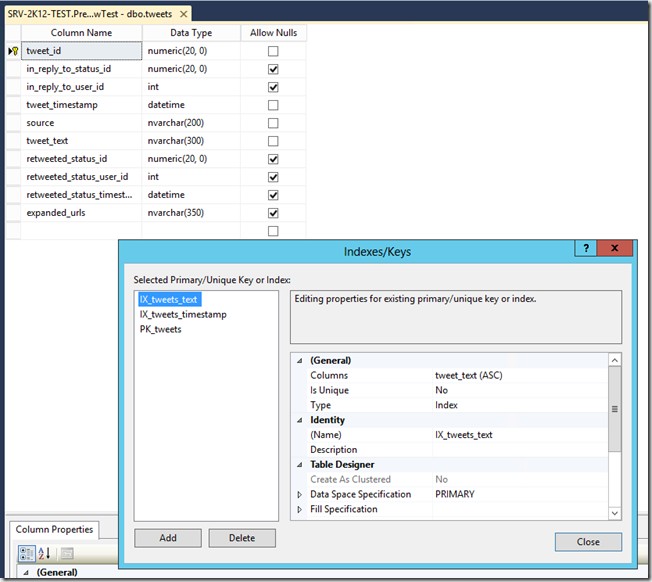

Let’s do some clean-up. Apply the following changes to the table schema:

- Create a non-clustered PK on tweet_id

- Create a clustered index on [timestamp]

- Create a non-clustered index on [text]

Conclusion

This was a fairly painful exercise for me as it turns out I’m rather rusty with using the data import wizard. For those of you who are also rusty, here’s some common (and perhaps in hindsight, obvious) tips:

- If your data is text qualified, make sure you specify the qualifying value

- If you use Unicode types, make sure you specify a double-byte length

- Remember to check column names to avoid using SQL or .NET types as names

- If something doesn’t look right in the Preview pane, your import isn’t likely to run successfully

Check back for my next instalment where I’ll establish a new schema and start to look at what we can do with SQL Server 2014 and the latest version of the Entity Framework.